The Accessibility Scotland 2019 theme was Accessibility and Ethics. In “I, Human” Léonie Watson @Tink uses the Three Laws of Robotics to explore what it means to be a human with a disability, in a world of AI and smart technologies.

How are smart technologies changing the way disabled people interact with the world? How do we design conversational interfaces with AI? What are the challenges we face as AI and smart technologies become ever more present in our lives?

Slides

I, Human slides in HTML format.

Transcript

Good morning, everyone.

It’s great to be here with you all in Edinburgh.

As Kevin said, I’m going to use Asimov’s three laws of robotics as a hook to talk about what I think it’s like to be a human.

In particular, a human with a disability in a world of robotics and perhaps noticeably for our times, artificial intelligence.

It might surprise you to know, though, that this is something that we have been thinking about, certainly imagining, for a great deal longer than you might think.

We can go back to the third century BC, and there is a book called The Argonautica.

You may well have heard of Jason and the Argonauts as a troop of adventurers from Greek mythology.

And one of the stories in one of the chapters of this book, Argonautica, describes a bronze robot, for want of a better word.

A very enormous being that patrolled the shores of the island of Crete.

And if it saw anybody coming in in a rowing boat or other vessel that it thought looked a bit suspect or maybe a challenge or a danger to the island of Crete, it would start chucking boulders at them.

And so perhaps we have our first example of an automaton, a robot, with some degree of artificial intelligence as a protector or even, perhaps, a weapon.

We can go to around 8 AD and look at a story in the Metamorphoses by Ovid.

In this, a statue maker was on his own in life, and he created a statue of a woman, and he fell in love with the statue of this woman.

He thought she was the most beautiful thing he’d created and, with a little bit of Greek mythology and magic from the gods, when he kissed the statue, she came to life and became an automaton.

Again, an entity that had some degree of intelligence and capability to exist in a human world and interact with humans.

And perhaps this is our first example of seeing artificial intelligence and artificial life as a form of companionship, perhaps even love.

But we won’t go there for this talk.

If we go a little further, we have Mary Shelley’s book Frankenstein in the 1800s.

This one, of course, is very well-known in popular fiction, and it explores the idea that we can recreate or create life using electricity as the fuel to spark life into action.

And in doing so, Frankenstein created the monster, as it’s often referred to in this particular case.

But again, something that was able to roam around and think for itself, albeit in this case to a limited degree.

But there we have, perhaps, one of the clearest examples of humans and their fear of things that have life and intelligence where they probably didn’t really ought to have done.

There’s also a book by Samuel Butler, called Erewhon, which is a slight fudge on the word “nowhere” in reverse.

And in this book, he wrote about the possibility that machines could also learn like human beings learn, by a process of elimination and abstraction.

He was pretty much laughed out of any literary club you could mention at the time.

People thought him absolutely ridiculous, but of course now, we have machine learning.

We have complex neural networking systems that are very capable as machines of learning in very similar ways to the ways that humans have always done.

And then in 1950, we come to Isaac Asimov and his story I, Robot.

And in it, he describes again what has become one of the classic questions about the interaction between artificial life and intelligence, and humans, and the decisions we make and the reasons behind the choices that we make when presented with different, often difficult, things that we can choose from.

And in his book, in the year 2058, there is a manual of robotics and this is where we see the three laws of robotics put into print for the first time.

I’m going to take each of these three laws now and use them as a way to explore some of the realities around what it’s like being a human in this world.

But it’s interesting to note that Asimov said of these three laws that he thought they were the only logical way that a human could rationally interact with robots.

But then he says that whenever he remembers saying that, he also remembers that, of course, humans are almost always not rational themselves.

And this is very true.

We’re not. We’re not in the slightest bit rational.

We’re not even a little bit sensible a lot of the time.

We’re extremely complex entities.

We arrived on the scene in the form of Homo sapiens about 300,000 years ago, give or take the odd 100,000 years depending on which source you believe.

But to all intents and purposes, this is when humankind as we more or less know it today started to emerge onto the planetary scene.

It was about 50,000 years ago that a time started that is known as behavioural modernity, and this is when a more recognizable form of ourselves started to emerge.

The form that was responsible for the cave paintings that we know exist in different countries around the world.

The time when we started to use music and abstract thinking to hunt in ways that were more intelligent, more collaborative than they had previously been.

In other words, it was a time when we started to use a lot of the senses and capabilities that we have at our disposal in ways that were somewhat different to everything we had done before.

And human intelligence is largely comprised of these things. It’s our ability to see, our ability to hear, to speak, and to think.

There’s more to it than that, of course, but these four things feed into pretty much everything we do and always have done.

Seeing, for example, uses about 30% of our brainpower.

A staggering amount. Unless you’re like me, of course.

I’ve got 30% of my brain sound asleep somewhere, apparently.

But a lot of visual processing goes on. There’s about a million visual fibres in each visual cortex that you’ve got.

There’s a whole chunk of your brain devoted to what, if you’re able to, you can see.

If you want a good example of how complex this process can be, on the screen, there is something of an optical illusion.

It’s a checkerboard, some black and white squares, and there are two squares labelled “A” and “B.”

One of them is in the shadow of a cylinder at the back of the checkerboard, and to all intents and purposes, visually, they look like they’re different colours.

They’re not, actually. If you go in and examine the true colours as they’re displayed, those two squares are absolutely identical, but the optical illusion is enough to throw our visual processes a bit of a wobble, and our minds are convinced that those two squares are different colours.

Seeing, of course, isn’t something everybody gets to do.

You could be like me, completely blind. You might have low vision. You might also have other conditions like colour-blindness, temporary conditions like a migraine.

There are all sorts of reasons why, as humans, our frailty in terms of seeing can be manifested.

Hearing uses a bit less of our brain power, about 3% according to the scientists. But still nonetheless, it’s something that we are doing pretty much constantly whether we’re aware of it or not.

And it too is responsible for quite a lot of complex processing.

I’m going to play you a clip from a Beatles song, Hey Jude. The lyrics are on screen. And if I play it in the original, it’ll sound very familiar to you.

(Plays audio file of Hey Jude in its original major key)

Singing

But if we change it to a minor key and play it again, the emotional processes that are triggered in response to listening to it are really quite different.

(Plays audio file of Hey Jude in minor key)

Singing

And so, just for a change in one sound characteristic, a song that is typically known as being reasonably uplifting has become very downbeat and a little sad and a bit wistful.

As I say, just for the change of one audio characteristic.

But of course, as humans, not all of us get to hear things like that. People are sometimes born deaf.

Or they’re hard of hearing, either temporarily or permanently and to various degrees, and may not be able to hear as well as other people are able to.

Speaking.

Speaking uses a lot of our brain as well, 50%.

Humans are great at speaking. We don’t often say very much, but we talk a great deal. And that’s absolutely fine too. It’s a good way to communicate.

We can do extraordinary things with speech.

We can comfort. We can amuse. We can scare. We can tell stories of times past and days of glory.

It’s Halloween coming up and you think of all the tales of ghouls and ghosties that are being told now.

We can really do amazing things with the capability to speak.

Even something as simple as saying “hello” to someone can be remarkably complex when you bring in the whole world.

(Plays audio file of greetings in different languages).

Hello! How are you? G’day! Hey! What’s the crack? Hola! Olà! Como esta? Xin chào! merhaba! Marhaba! salamaleikum! bonsoir! Bonjour! Hallo! Ciao! konnichi wa! namaskara! namaste! Sawasdee! Sawasdee khaa! Oi! Salaam!…

And there you have it. How you say “hello” in a tiny fraction of the languages that we speak around the world.

We speak hundreds of languages and there’s perhaps 20 in there.

And that’s just a simple greeting.

“Hi. How are you doing? What’s going on in your life at the moment?”

So speaking is a very complicated thing. And of course, not everybody gets to do that.

There are many people who, for a variety of reasons, are unable to communicate through speech, either because they have a condition that makes speaking itself difficult, or because the physical side of forming the words, the vocal cords, et cetera, doesn’t work properly.

Also, people who have hearing disabilities.

I’ve met people who are profoundly deaf who find it difficult to articulate and communicate very clearly using speech.

There’s a whole bunch of reasons.

A sore throat, of course, being another one, why temporarily or permanently, we might find ourselves in the position of not being able to use that particular bit of our capabilities.

So to bring it back to artificial intelligence, we return again to the 1950s and a paper by Alan Turing.

And in this paper, he speculated about the idea that, essentially, the human brain worked on electrical impulses.

So again, a hark back to Frankenstein and the electricity and the lightning that powered the monster.

But Turing quite seriously thought that the way the brain worked was very much like an electrical network.

It fired signals and pulses and synapses in the case of the human brain, and that was how the functionality of our brain worked.

And that really started people thinking quite seriously about the possibility of artificial intelligence.

In 1956 at Dartmouth College, there was a workshop and the theme of this workshop was all about whether we could just define human characteristics and behaviours and processes so clearly in so much detail that we could actually recreate them in machine terms.

Quite a remarkable thing to propose, but again, it fed into this idea that perhaps we could start to artificially recreate some of those human capabilities that make us unique in the animal world.

So our laws of robotics.

Our first law is that a robot may not harm a human being, or through its inaction, allow a human being to come to harm.

Which is all very well, but to be able to do that, to protect a human counterpart, the robot has got to be able to understand something of the world around it.

And that means, for one thing, it’s got to be able to see the world around it.

Image recognition is what it’s commonly known as, and it’s basically the process of taking an image and not only being able to identify what’s in it, but having some means of understanding and processing the data that’s contained within the image.

If we go back to 1976, they estimated that to do this, to simulate retinal behaviour, would take something like 1,000 MIPS, that is, a thousand million instructions per second, for a computer.

Now, this was at the time when the fastest computer on the planet, a Cray-1, was only capable of about 80 to 100 MIPS.

So the processing power was nowhere near good enough to really simulate the human ability to see.
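As a rough back-of-the-envelope check on that gap, the shortfall can be worked out from the two figures quoted. This is only a sketch: it assumes the estimate is read as about 1,000 MIPS, and the names used are purely illustrative.

```python
# Figures quoted in the talk, in MIPS (million instructions per second).
REQUIRED_MIPS = 1_000      # estimated cost of simulating retinal behaviour (assumed reading)
CRAY_1_MIPS = (80, 100)    # quoted range for the Cray-1


def shortfall(required: float, available: float) -> float:
    """Return how many times more processing power was needed than was available."""
    return required / available


best_case = shortfall(REQUIRED_MIPS, max(CRAY_1_MIPS))
worst_case = shortfall(REQUIRED_MIPS, min(CRAY_1_MIPS))

print(f"The Cray-1 fell short by a factor of {best_case:g} to {worst_case:g}")
```

On those figures, the fastest computer of its day was roughly ten times too slow.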

By the time we got to 2011, we did have the capability and we also had the average …

Sorry. We had an average of many more: somewhere between about 10,000 and a million MIPS was what the average image recognition application was taking. So processing power was a huge piece of the puzzle.

Something else came into play, though. In the 1980s, there’s something known as Moravec’s paradox.

And he basically says that the tricky thing with all of this is that the really complicated stuff is easy. It’s the really simple stuff that’s really damn difficult.

And when I first read that, I thought,

“Well, that’s crazy. How can simple stuff be harder than the complex stuff?”

But he’s absolutely right.

On-screen, there’s a picture of a chair.

It’s a fairly standard chair. It’s a wooden chair. It’s got the usual back, a thing that you sit on, some legs, and most people familiar with how a chair looks would pretty much immediately identify it as a chair.

There’s another chair on the screen now.

I suspect most of you were able to identify that as a chair too. It’s a camping chair.

It’s really low to the ground. It’s made of canvas and a bit of tubing for a framework.

You kind of sit in a little sort of canvas nest, if you like, so it bears really no visual resemblance to the wooden chair with the legs and the back that we would expect, but as humans, we’ve quite quickly been able to go,

“Yeah, that’s a thing you sit in. We’ll call it a chair.”

Computers really struggle with this.

And then there’s one more. The one on-screen now, again, is perhaps pushing human capability to its extremes.

It’s shaped a bit like a cotton reel, an old-fashioned cotton reel standing on its end, so you sit on the top and it sort of goes in towards the centre of its upright bit and out again.

But the bottom is kind of like a ball so you wobble around on it when you’re sitting on it.

Now again, most of us, I suspect, seeing that picture would have gone,

“Yeah, okay. Wouldn’t want to sit on it. But technically, we’ll go with it. It’s a chair.”

But from a computing point of view, there are no rules to this.

We can’t say,

“a chair has a back and a thing you sit on and four legs,”

because that wouldn’t have applied to the second two examples.

We can’t say that a chair has a back because that wouldn’t have applied to the third example.

So all the rules of being able to identify what something actually looks like completely go out of the window, but yet to humans, this is a reasonably simple thing to be able to do.
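The way hand-written rules break down on those three pictures can be sketched in a few lines. This is only an illustration: the `Seat` class and the attribute values are assumptions read off the spoken descriptions, not anything shown in the talk.

```python
from dataclasses import dataclass


@dataclass
class Seat:
    name: str
    has_back: bool
    has_seat: bool
    leg_count: int


# Attribute values are assumptions based on how the three pictures are described.
SEATS = [
    Seat("wooden chair", has_back=True, has_seat=True, leg_count=4),
    Seat("camping chair", has_back=True, has_seat=True, leg_count=0),
    Seat("cotton-reel chair", has_back=False, has_seat=True, leg_count=0),
]


def strict_rule(seat: Seat) -> bool:
    """A chair has a back, a thing you sit on, and four legs."""
    return seat.has_back and seat.has_seat and seat.leg_count == 4


def loose_rule(seat: Seat) -> bool:
    """A chair has a back."""
    return seat.has_back


for seat in SEATS:
    print(f"{seat.name}: strict={strict_rule(seat)}, loose={loose_rule(seat)}")
```

The strict rule accepts only the wooden chair, and the looser rule still rejects the third one, even though a person would call all three chairs.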

In 2006, Eric Schmidt from Google came up with a big piece of the puzzle, and that was cloud computing.

This gave us access to the kind of processing power that was really necessary to take us from the early days of retinal simulation through to that 2011 example of 10,000 to a million MIPS needed for this kind of a thing.

Up until the point where we got to cloud computing and we could have applications running in the cloud that could flex and consume as much processing power as they really needed to do so, we struggled to do a lot of this image recognition capability.

But with the advent of that, we started to get some things coming our way.

In 2016, Facebook introduced image recognition, so if someone uploads a picture now to Facebook, the image recognition that they use will automatically provide a text description.

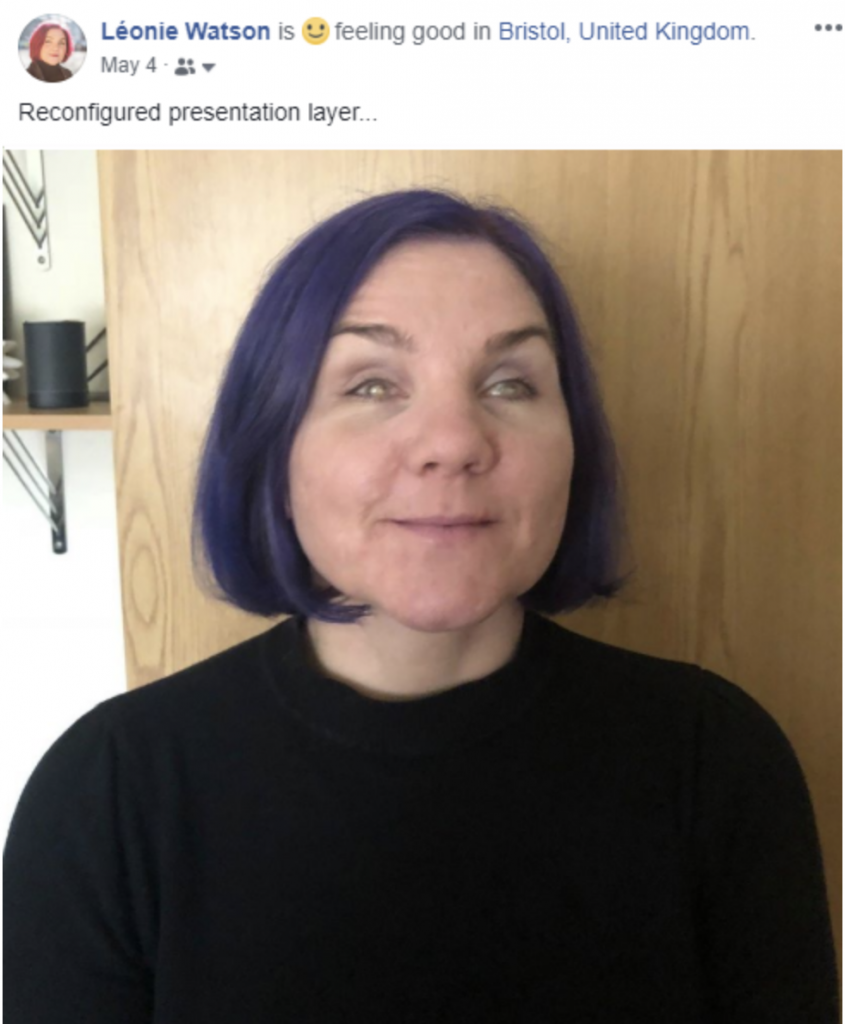

There’s a picture on-screen of me the first day I dyed my hair this purplish colour, and the text that’s produced by the Facebook image recognition says it’s a close-up of a person in a room.

Now, that’s not perfect.

Doesn’t describe the fact that I had different colour hair from the last picture I posted to Facebook at the time, but it’s not bad, and it’s a hell of a lot better than what used to be there, which would have been some garbage string of characters that would have meant nothing to me whatsoever.

So, first real taste of how useful to a human with a disability image recognition can be.

In 2017, Microsoft stepped up and they released an app for iOS (Seeing AI) that is incredibly versatile and useful to people who are unable to see, because it uses image recognition technology.

(Plays Microsoft Seeing AI promotional video)

Seeing AI is a Microsoft research project for people with visual impairments.

The app narrates the world around you by turning the visual world into an audible experience.

Point your phone’s camera, select a channel, and hear a description.

The app recognizes saved friends.

Jenny near top right, three feet away.

Describes the people around you, including their emotions.

28-year-old female wearing glasses, looking happy.

It reads text out loud as it comes into view, like on an envelope.

Kim Lawrence, PO Box …

Or a room entrance.

Conference 2005.

Or scan and read documents like books and letters.

The app will guide you and recognize the text with its formatting.

Top left edge is not visible. Hold steady. Lease agreement. This agreement is …

When paying with cash, the app identifies currency bills.

20 US dollars.

When looking for something in your pantry or the store, use the barcode scanner with audio cues to help you find what you want.

Campbell’s tomato soup.

When available, hear additional product details.

Heat in microwave bowl on high …

And even hear descriptions of images in other apps like Twitter, by importing them into Seeing AI.

A close-up of Bill Gates.

Finally, explore our experimental features like scene descriptions to get a glimpse of the future.

I think it’s a young girl throwing a Frisbee in the park.

Experience the world around you with the Seeing AI app from Microsoft.

So it’s an extraordinary bit of technology that’s available for free that we can carry in our pockets on our phones.

I did go off it slightly the day it decided I look like a 67-year-old woman, but oh well. Can’t win them all, can we?

But really, I mean, how amazing that we, with a bit of artificial intelligence, image recognition, and some cloud computing have this ability in our pockets.

In 2018, Apple introduced Face ID, tying image recognition into another one of the things that humans sometimes aren’t able to do, which is speaking.

Face recognition was intended just to make things more convenient for humans generally, but of course, if speaking to your device is complicated or if mobility is a complication, so entering passwords and pin numbers is a problem, then using this ability for artificial intelligence to see is another really good thing to have.

(Plays Face ID promotional video)

Upbeat pop music playing

So that takes us to our second law.

The robot must obey orders given to it by a human being, unless obeying that order would somehow break the first law.

So this is where we get into the territory of needing to be able to communicate with our robots and artificially intelligent devices and applications.

And that, of course, is a two-way process.

The thing needs to be able to hear us, to understand what we’re saying, and translate that into text that can be then used to form the basis of the actual processing of whatever your instruction or command is.

In 1993, Apple were one of the first to bring speech recognition, the ability for a device to hear what a human was saying and do something useful with it, to the market.

(Plays Macintosh speech recognition promotional video)

Macintosh, open letter.

Macintosh, print letter.

Macintosh, fax letter.

While everyone is still trying to build a computer you can understand …

Macintosh, shut down.

… we built a Macintosh that can understand you.

Good bye.
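Once the speech has been turned into text, the step of turning that text into an instruction can be sketched as below. This is a minimal illustration, not how the Macintosh did it: the `Command` type, the `parse_command` function and the list of known actions are all hypothetical, and the speech recognition itself is assumed to have already happened.

```python
from typing import NamedTuple, Optional


class Command(NamedTuple):
    action: str
    target: Optional[str]


# Hypothetical vocabulary, taken from the phrases heard in the video.
KNOWN_ACTIONS = ("shut down", "open", "print", "fax")


def parse_command(transcript: str, wake_word: str = "macintosh") -> Optional[Command]:
    """Turn recognised text such as 'Macintosh, open letter.' into a Command.

    Returns None when the text is not addressed to the device
    or does not start with a known action.
    """
    # Normalise case, drop commas and full stops, and collapse whitespace.
    text = " ".join(transcript.lower().replace(",", " ").replace(".", " ").split())
    if not text.startswith(wake_word + " "):
        return None
    rest = text[len(wake_word) + 1:]
    for action in KNOWN_ACTIONS:
        if rest == action or rest.startswith(action + " "):
            target = rest[len(action):].strip() or None
            return Command(action, target)
    return None


print(parse_command("Macintosh, open letter."))
print(parse_command("Macintosh, shut down."))
print(parse_command("Good bye."))
```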

By the time we get to 1997, Dragon NaturallySpeaking was remarkably popular, a speech recognition tool that’s used today by many people who find it difficult to interact with technology using a mouse, keyboard, touch or other physical forms of interaction.

It’s also used professionally by lawyers and doctors who need to be able to do dictation in environments where their hands are otherwise occupied with their professional activities.

(Plays Dragon NaturallySpeaking promotional video).

This is Dragon NaturallySpeaking, speech recognition software that turns your voice into text.

Three times faster than typing with up to 99% accuracy.

Now, most of our communication with technology has improved.

We don’t have to do that punctuation thing, unless you happen to use something like Siri to send text messages, which I do, and you end up talking like this.

I actually left somebody a voicemail not long ago, where I ended up talking to them like that, because for a moment, I forgot I wasn’t dictating a text message, I was leaving them a voicemail.

So, yeah. Maybe that just says more about me than the thing.

But we have much improved ways now for us to be able to talk with various types of technology and for it to be able to hear and understand us.

In 2013, something came along in the form of Kinect sign language translation.

(Plays Kinect Sign Language Translation video).

This brings in the idea of speech in the form of gestures, sign language specifically, and uses AI to translate into other forms of sign language, but also into other forms of human speech and language.

This project is a sign language translator. It translates from one sign language to another. It helps the hearing and the deaf communicate.

You can communicate between American Sign Language and Chinese Sign Language, or potentially any sign languages to any other natural language.

The Kinect can capture the sign language, and then recognise the meaning of the sign language, including the posture, the trajectory, and then there’s an automatic translation into spoken language.

I really like this.

So here we’re using AI with image recognition capability, speech recognition capability, gesture recognition capability, a whole bunch of recognition capabilities, to enable humans to communicate across boundaries, if you like, a lot more readily than they were able to before.

We then come onto speaking, the other half of this. Because obviously, if we’re going to give something an instruction, we want our robot to be able to respond to us in some way.

So there needs to be a way for it to respond by translating its data, its text into synthetic speech, text-to-speech translation.

This goes back a long, long way. Wolfgang von Kempelen came up with a speaking machine. He actually recreated, effectively, the lungs and vocal tracts and a whole bunch of different pieces as an actual mechanical device.

And rumour has it, or reports have it that it actually was capable of producing some form of human speech. I would imagine fairly crude speech, pretty rough around the edges. But again, turns out we’ve been thinking about this stuff for a lot longer than you might think.

1939 gives us our first working example, or reported example, of this at the World’s Fair, with something called the Voder Talking Machine.

(Plays Voder talking machine video).

Speaker 1: The machine uses only two sounds produced electrically. One of these represents the breath. The other, the vibration of the vocal cords.

Speaker 1: There are no phonograph records or anything of that sort, only electrical circuits such as are used in telephone practice.

Speaker 1: Let’s see how you put expression into a sentence.

Speaker 1: Say, “She saw me,” with no expression.

Machine voice: She saw me.

Speaker 1: Now say it in answer to these questions.

Speaker 1: Who saw you?

Machine voice: SHE saw me.

Speaker 1: Who did she see?

Machine voice: She saw ME.

Speaker 1: Did she see you or hear you?

Machine voice: She SAW me.

Which is kind of really quite remarkable, considering how old that is.

For anyone who was reading the captions there, I apologise for using caps to show the emphasis on the different words in the sentence.

I did a bit of questioning and found that there are any number of different ways of showing emphasis in captioned sentences, and I didn’t have formatting capability to do italics, which is the other, I believe, way of doing it.

So I had to resort to caps, which I know can sometimes mean shouting. I’m not shouting at you. I’m sorry. So, just a quick apology there.

In 1961, we move things forward a bit with IBM, and they did the one thing of course that we all always want to do as soon as we can make anything talk, and that’s make it sing.

(Plays audio recording of machine singing).

Machine voice singing

Which is the other thing you got to love about humans, our ability to do the completely ridiculous with millions of dollars’ worth of technology at our disposal.

It’s awesome.

Speech synthesis didn’t really move on a great deal from those days.

That is a form of speech synthesis known as formant synthesis. It’s very robotic. It’s very quick. It’s very responsive, but it’s formed entirely artificially.

The engines that render this speech do not have any actual, real human content at all.

So if I play a sound clip of the text that’s on screen, you’ll hear just how artificially robotic it sounds.

(Plays audio recording demonstrating Formant synthesis).

For millions of years, mankind lived just like the animals, then something happened that unleashed the power of our imagination.

We learned to talk.

So there’s no real intonation, no cadence, no pitch change.

Nothing really very interesting there.

More recently, we had something called concatenative synthesis, and this is where they recorded a human voice and then broke it down into very, very small chunks, into phonemes and other chunks much smaller than a syllable.

And these engines, when they render speech, actually rebuild the text out of those tiny little chunks.

It means it’s not quite so quick, not quite so responsive, but it is marginally at least a little bit more human sounding.

(Plays audio recording demonstrating Concatenative synthesis).

For millions of years, mankind lived just like the animals, then something happened that unleashed the power of our imagination.

We learned to talk.

So it’s a little bit better.
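The rebuilding step in concatenative synthesis can be sketched as a lookup followed by joining the chunks end to end. This is a toy illustration only: the unit names and sample values in `UNIT_BANK` are made up, and a real engine would also choose between many recordings of each unit and smooth the joins.

```python
# Hypothetical bank of recorded units, each mapped to a few made-up audio samples.
UNIT_BANK = {
    "t": [0.1, 0.3],
    "aw": [0.5, 0.4, 0.2],
    "k": [0.3, -0.1],
}


def synthesise(units):
    """Rebuild an utterance by joining the recorded chunk for each unit in order."""
    samples = []
    for unit in units:
        samples.extend(UNIT_BANK[unit])
    return samples


talk = synthesise(["t", "aw", "k"])
print(len(talk), "samples:", talk)
```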

Google’s DeepMind did a lot of work, and they came up with something called parametric synthesis.

Now, this is entirely artificial again, but it’s based on a statistical model rather than the ways that the formant synthesis engines work.

And of course, when they released some examples, what did they do but Bruno Mars?

(Plays audio recording demonstrating Parametric synthesis)

(Machine singing)

But it’s remarkably human-sounding.

It took me, and I listen to text-to-speech driven things all the time every day, it took me a moment to think,

“Really? Okay, wow. That’s a bit of an improvement.”

So, we’re getting better in the quality that gets output.

Then in 2014, of course, we got the Amazon Echo, and we ended up with devices in our homes that we could talk to, to get them to do reasonably simple tasks.

(Plays Amazon Echo promotional video).

Speaker 1: Introducing Amazon Echo.

Alexa: Hi, there.

Speaker 1: And some of Echo’s first customers. Echo is a device designed around your voice.

Speaker 1: Simply say, “Alexa,” and ask a question or give a command.

Speaker 1: Alexa, how many tablespoons are in three-fourths cup?

Alexa: Three-fourths cups is 12 tablespoons.

Speaker 1: Echo is connected to Alexa, a cloud-based voice service, so it can help out with all sorts of useful information right when you need it.

Speaker 2: To me, it’s bringing technology from Iron Man and Tony Stark’s house into your own.

Speaker 2: Echo can hear you from anywhere in the room, so it’s always ready to help.

Speaker 3: I can have the water running, I can be cooking, the TV can be on in the back room, and she still can hear me.

Speaker 1: It can create shopping lists.

Speaker 2: Alexa, add waffles to my shopping list.

Speaker 1: Provide news.

Alexa: From NPR news in Washington …

Speaker 1: Control lights.

Speaker 2: Alexa, turn on the lights.

Speaker 4: We use it to set timers. We use that feature all the time and that’s one that’s specifically helpful, I think, uniquely to blind people.

Speaker 1: Calendars.

Alexa: Today at 1:00 pm is lunch with Madeline.

Speaker 1: And much more.

So, a device that actually helps a lot of us out in a lot of different ways.

I use it very much as the lady just said there, to set timers.

I find it really useful. The shopping list, too.

Not because I can’t see, but actually because I have a lousy memory.

So, these devices are using a whole range of different artificial technologies and intelligences to help us out as human beings.

There is perhaps a slight problem with the Echo devices, and indeed all of these devices, which is that they all use a single voice.

They all sound female, and those voices are not necessarily entirely suited to the thing you might want to actually do with them.

So for example, there’s a piece of text on-screen which is the opening sentence or so from Stephen King’s It, one of the most terrifying books I have ever, ever read.

But if you ask Alexa to read it, this is what it sounds like.

(Plays audio recording of Alexa reading Stephen King).

The terror, which would not end for another 28 years, if it ever did end, began so far as I know or can tell, with a boat made from a sheet of newspaper floating down a gutter swollen with rain.

Now, I don’t know if it’s me, but that doesn’t quite fill me with the terror that I was hoping for at that point.

What a lot of people don’t realize is that you can actually change the way technologies like this will sound when they respond back to you.

It’s a technology known as Speech Synthesis Markup Language, SSML.

And most of the speaking technologies, Echo and Google Home for example, all use this.

So we can change things like the voice that gets used, and the emphasis that it places on words, to make that piece of text sound like this.

(Plays SSML audio recording of Alexa reading Stephen King).

The terror, which would not end for another 28 years, if it ever did end, began so far as I know or can tell, with a boat made from a sheet of newspaper floating down a gutter swollen with rain.

Now, it’s not perfect, but at least it’s the right sex for the character who is actually speaking at that point in the book, and it kind of has a bit of that Hollywood movie feel that you might expect. It’s certainly one up, I think, on chirpy Alexa reading the opening scene.
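The kind of SSML involved in changing the voice and the emphasis can be sketched as follows. This is not the markup used in the talk, which isn’t shown: the `build_ssml` helper is hypothetical, the voice name is an assumption, and which voices a given device accepts depends on the platform. The `speak`, `voice` and `emphasis` elements themselves are standard SSML.

```python
import xml.etree.ElementTree as ET


def build_ssml(text_before: str, stressed: str, text_after: str, voice: str) -> str:
    """Wrap a sentence in SSML that picks a voice and stresses one phrase."""
    speak = ET.Element("speak")
    voice_el = ET.SubElement(speak, "voice", name=voice)
    voice_el.text = text_before
    emphasis = ET.SubElement(voice_el, "emphasis", level="strong")
    emphasis.text = stressed
    emphasis.tail = text_after
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml(
    "The terror, which would not end for another 28 years, ",
    "if it ever did end",
    ", began so far as I know or can tell, with a boat made from a sheet of "
    "newspaper floating down a gutter swollen with rain.",
    voice="Matthew",  # assumed voice name, for illustration only
)
print(ssml)
```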

We can see another one, if we take another clip from one of my favourite films. Alexa reads it like this.

(Plays audio recording of Alexa reading the Princess Bride).

Hello. My name is Inigo Montoya. You killed my father. Prepare to die.

But if we change the voice again and the accent this time as well and we change some of the timing, the breaks and pauses in the sentence, we can get a little bit closer to something a bit more like it should be.

(Plays SSML audio recording of Alexa reading the Princess Bride).

Hello. My name is Inigo Montoya. You killed my father. Prepare to die.
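Changing the timing comes down to adding SSML `break` elements between the sentences. A minimal sketch, assuming a 600 millisecond pause and an illustrative voice name (neither is taken from the talk), might look like this.

```python
import xml.etree.ElementTree as ET

PAUSE = '<break time="600ms"/>'  # pause length is a guess, not the value used in the talk

sentences = [
    "Hello.",
    "My name is Inigo Montoya.",
    "You killed my father.",
    "Prepare to die.",
]

# Assumed voice name, chosen only to illustrate swapping in a different accent.
ssml = (
    '<speak><voice name="Enrique">'
    + PAUSE.join(sentences)
    + "</voice></speak>"
)

ET.fromstring(ssml)  # raises ParseError if the markup is not well-formed
print(ssml)
```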

And there are other things.

We can take another example here, from Winnie the Pooh.

Now, if we ask Alexa to read this, it’s not too bad. At least on a British Echo, she’s got the right accent.

(Plays recording of Alexa reading Winnie the Pooh).

Piglet sidled up to Pooh from behind.

“Pooh,” he whispered.

“Yes, Piglet?”

“Nothing,” said Piglet, taking Pooh’s paw. “I just wanted to be sure of you.”

But there’s still something a little bit lacking, and that’s kind of a sense of Piglet, really.

And we can do this as well with Alexa. We can change the voice, but we can also do things like change the volume and the pitch of certain chunks of the text, so that Piglet’s voice comes out sounding, well, hopefully a bit more Piglet.

(Plays SSML audio recording of Alexa reading Winnie the Pooh).

Piglet sidled up to Pooh from behind.

“Pooh,” he whispered.

“Yes, Piglet?”

“Nothing,” said Piglet, taking Pooh’s paw. “I just wanted to be sure of you.”
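Changing the volume and pitch of certain chunks of text maps onto the SSML `prosody` element. The sketch below is one possible way to do it, not the markup from the talk: the speaker labels, the helpers `prosody` and `render`, and the "soft" and "high" settings are assumptions chosen for illustration.

```python
from xml.sax.saxutils import escape


def prosody(text: str, volume: str, pitch: str) -> str:
    """Wrap one chunk of text in a prosody element."""
    return f'<prosody volume="{volume}" pitch="{pitch}">{escape(text)}</prosody>'


# Each chunk is tagged with who is speaking (labels are hypothetical).
SCRIPT = [
    ("narrator", "Piglet sidled up to Pooh from behind."),
    ("piglet", "Pooh,"),
    ("narrator", "he whispered."),
    ("pooh", "Yes, Piglet?"),
    ("piglet", "Nothing,"),
    ("narrator", "said Piglet, taking Pooh's paw."),
    ("piglet", "I just wanted to be sure of you."),
]


def render(script: list[tuple[str, str]]) -> str:
    """Make Piglet's chunks softer and higher; leave the rest untouched."""
    parts = []
    for speaker, line in script:
        if speaker == "piglet":
            parts.append(prosody(line, volume="soft", pitch="high"))
        else:
            parts.append(escape(line))
    return "<speak>" + " ".join(parts) + "</speak>"


piglet_ssml = render(SCRIPT)
print(piglet_ssml)
```

Tagging each chunk with its speaker keeps the voice settings in one place, so trying a different volume or pitch for Piglet means changing a single line.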

So, having our robots, our artificial intelligence be able to communicate back with us in ways that seem right, make us comfortable, is another kind of really important piece of the puzzle.

And that brings us now to our last law, and …

Oops. Shoot. Sorry.

Piglet sidled up to Pooh from behind.

“Pooh,” he whispered.

“Yes, Piglet?”

“Nothing,” said Piglet, taking Pooh’s paw. “I just wanted to be sure of you.”

It’s because I really like that clip, why I did that.

So, the third law.

The robot must protect its own existence as long as that doesn’t come into conflict with either of the first two laws.

Now, this is all very well, but this is where the real human angle comes into this, perhaps more than ever.

Because essentially, artificial intelligence is still human intelligence.

In 2013, IBM’s Watson, its AI, learnt to swear because the humans responsible for it thought they’d try to make it speak more easily and informally and more familiarly with humans, and so they fed it the Urban Dictionary.

(Audience laughing).

See? You get it. Lots of really, really smart scientists at IBM apparently didn’t, and two days later, it started swearing like nobody’s business and they had to wipe its memory. Yeah, you got to like it.

In 2016, Microsoft released a Twitter bot with AI, Tay AI, and they let it loose on Twitter.

And again, as human beings, we’re just like,

“Why? What on Earth would you do that for?”

But they did, and somewhat unsurprisingly, several days later, it turned into a completely nasty, every -ist and -ism driven thing you could possibly think of and they took it offline and hopefully wiped it off the face of the planet.

In 2017, it turned out that Google’s image recognition AI had pretty terrible gender bias.

It was really appalling at recognising women and people who identified as nonbinary, because most of the data that the humans had fed it had been white men.

It was also later found to have racial identification problems, precisely because the data set contained far more white people than it did people of colour or other nationalities from different countries.

And so it was pretty terrible at recognising anything outside of the data set that it had been fed by the people that created it.

Another one in 2017, there’s a Hanson robot called Sophia.

Now, this robot was being promoted as what would ultimately become a companion for people, someone designed to be reassuring and to help perhaps elderly people or people with cognitive disabilities who maybe get anxious, all of those kind of things.

And in this particular promotion, Dr. David Hanson, the guy who invented her, was going through a great spiel asking her if she could remember to do this and remind so-and-so to take their medication.

And he jokingly at the end said,

“And could you just destroy all humans?”

To which she replied,

“Okay. I’ll destroy all humans. I’ve added that to my to-do list.”

Brilliant. And we laugh, but … But.

And then in 2018, Amazon Rekognition, its image recognition API, they fed it a picture of all of the American Congress at the time.

And it, perhaps quite accurately, identified most of them as being criminals.

But the inherent problem is, of course, that the artificial intelligence is really not that intelligent at all.

It’s a lot dumber than all of this might have led you to believe.

It’s vulnerable to the information that it’s given by the humans that create it. The data that we give it is a huge part of that intelligence.

Ultimately, though, even the neural networks that can do some learning for themselves, they’re still constrained by the parameters put in place by the people that created them and the data that they’re given.

So artificial intelligence and by proxy, our robotics, are really still extraordinarily closely tied in with us, in far more ways than just their ability to see when we can’t, their ability to hear when we can’t, to speak when we can’t.

Together, we are quite remarkable, I think, but we do have to remember that ultimately, it’s us that is the intelligence, for the time being at least, behind the artificial and the robotics.

And I think Microsoft captures this far better than I’m able to.

(Plays Microsoft AI promotional video).

Behind every great achievement, every triumph of human progress, you’ll find people driven to move the world forward.

People finding inspiration where it’s least expected. People who lead with their imagination.

But in an increasingly complex world, we face new challenges.

And sometimes it feels like we’ve reached our limits.

Now, with the help of intelligent technology, we can achieve more.

We can access information that empowers us in new ways.

We can see things that we didn’t see before, and we can stay on top of what matters most.

When we have the right tools and AI extends our capabilities, we can tap into even greater potential, whether it’s a life-changing innovation, being the hero for the day, making a difference in someone’s future, or breaking down barriers to bring people closer together, intelligent technology helps you to see more, do more, and be more.

And when your ingenuity is amplified, you are unstoppable.

Thank you.